Generative Pre-trained Transformer 2 (GPT-2) is an open-source language model created by OpenAI and announced in February 2019. GPT-2 can generate text that sounds human! It was pretrained on a large collection of text from the internet so that it would model the general distribution of human language found there. One way of extending GPT-2’s functionality is to fine-tune the model on a more targeted dataset. By showing the model a more focused set of data, one can steer it toward generating text that more closely resembles the fine-tuning data.

This has numerous benefits. Most notably, it allows different groups to adapt GPT-2’s behaviour to a regional vernacular or industry-specific language without training a completely new model.

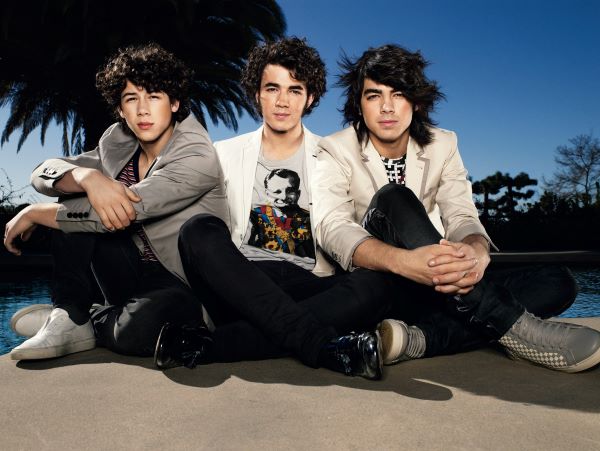

Today, we’re gonna get it to write pop songs like the Jonas Brothers.

*You know these guys, right? Of course you do.*

To accomplish our task, we are going to use minimaxir’s aitextgen package for Python. You can read more about it here. It offers a variety of helper methods that abstract away the complexity of loading, fine-tuning, and sampling from GPT-2, so that we can focus on generating new lyrics! We are also going to take advantage of Google Colab’s free GPU access to speed up training.

First, we need a dataset: in this case, a collection of song lyrics. Kaggle is a great source of public datasets for training your models, and I chose this one. Most datasets require a bit of cleaning (preprocessing), depending on the context you are using the data for, but that’s a process that can be explained another day.
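The dataset’s exact layout isn’t reproduced here, so the sketch below assumes a CSV with hypothetical `artist` and `lyrics` columns. It shows one possible cleaning pass, not necessarily the one used for this model: collapse whitespace, drop blank lines and section tags such as `[Chorus]`, skip very short songs, and join everything into a single plain-text file with a blank line between songs. The helper names are illustrative.

```python
import csv
import re

# Lines like "[Chorus]" or "[Verse 1: Nick Jonas]" are annotations, not lyrics.
SECTION_TAG = re.compile(r"^\[.*\]$")


def read_lyrics(csv_file, lyrics_column="lyrics", artist_column="artist", artist=None):
    """Yield raw lyrics from an open CSV file (column names are hypothetical)."""
    for row in csv.DictReader(csv_file):
        if artist is None or row.get(artist_column) == artist:
            yield row[lyrics_column]


def clean_lyrics(raw):
    """Collapse runs of whitespace and drop blank lines and [Section] tags."""
    lines = []
    for line in raw.splitlines():
        line = re.sub(r"\s+", " ", line).strip()
        if line and not SECTION_TAG.match(line):
            lines.append(line)
    return "\n".join(lines)


def build_training_text(songs, min_lines=4):
    """Clean each song, skip very short ones, and separate songs with a blank line."""
    cleaned = (clean_lyrics(song) for song in songs)
    kept = [song for song in cleaned if song and song.count("\n") + 1 >= min_lines]
    return "\n\n".join(kept) + "\n"


# Example: turn the CSV into a training file (file names are hypothetical)
# with open("lyrics.csv", newline="", encoding="utf-8") as src:
#     text = build_training_text(read_lyrics(src))
# with open("lyrics.txt", "w", encoding="utf-8") as dst:
#     dst.write(text)
```

Filtering by `artist` is optional; training on a single artist gives a narrower style, while a broader pop selection gives the model more data to learn from.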

Once the lyric data is cleaned, we load it into our notebook, and thanks to aitextgen we can start training right away. We let the model train and then download it to our computer.
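Here is a minimal sketch of the training and download steps. It assumes the cleaned text was saved as `lyrics.txt` (a hypothetical file name) and that it runs in a Colab GPU runtime with aitextgen installed. The `aitextgen(tf_gpt2="124M", to_gpu=True)` constructor and the `train()` arguments follow the usage shown in aitextgen’s README and example notebook. The hyperparameter values are starting points, not the settings used for this post, and the helper names `training_kwargs`, `fine_tune`, and `package_model` are hypothetical.

```python
import shutil


def training_kwargs(num_steps=3000):
    """Keyword arguments for aitextgen's train(); the values are assumptions, not tuned."""
    return dict(
        line_by_line=False,   # treat the file as one continuous text, not one sample per line
        from_cache=False,     # tokenize the raw .txt file instead of loading a cached dataset
        num_steps=num_steps,  # total training steps
        generate_every=1000,  # print a sample periodically to eyeball progress
        save_every=1000,      # write a checkpoint periodically
        learning_rate=1e-3,
        fp16=False,
        batch_size=1,
    )


def fine_tune(train_file="lyrics.txt", num_steps=3000):
    """Fine-tune the 124M GPT-2 on `train_file` (hypothetical name) using the GPU."""
    from aitextgen import aitextgen  # imported here so the other helpers work without it

    ai = aitextgen(tf_gpt2="124M", to_gpu=True)  # fetches the base 124M GPT-2 weights
    ai.train(train_file, **training_kwargs(num_steps))  # saves to ./trained_model by default
    return ai


def package_model(model_folder="trained_model", archive_name="jonas_gpt2"):
    """Zip the saved model folder so it can be downloaded; returns the .zip path."""
    return shutil.make_archive(archive_name, "zip", root_dir=model_folder)


# In Colab, after fine_tune() finishes:
#   from google.colab import files
#   files.download(package_model())
```

Keeping the aitextgen import inside `fine_tune` means the packaging helper can run without the library installed. Back on your own machine, unzipping the archive into a `trained_model` folder and calling `aitextgen(model_folder="trained_model")` should reload the fine-tuned model.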

Now all we have to do is make a barebones Flask app so that we can use our newly trained AI to generate lyrics on the web! Like me, though, you might have trouble finding a free host. Anyway, let’s take a look at the results.
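The app itself isn’t shown in this post. Below is a minimal sketch of what a barebones Flask app around the fine-tuned model might look like. It assumes the downloaded model was unzipped into a `trained_model` folder next to the app and uses aitextgen’s `generate_one()`. The `/generate` route, its `prompt` and `max_length` query parameters, and the 300-token cap are hypothetical choices.

```python
from flask import Flask, jsonify, request

MAX_LENGTH_CAP = 300  # hypothetical cap so a single request can't run for too long


def load_generator(model_folder="trained_model"):
    """Return a (prompt, max_length) -> str function backed by the fine-tuned model.

    Assumes aitextgen is installed and the model lives in `model_folder`.
    """
    from aitextgen import aitextgen  # heavy import, only done when the model is needed

    ai = aitextgen(model_folder=model_folder)

    def generate(prompt, max_length):
        return ai.generate_one(prompt=prompt, max_length=max_length)

    return generate


def create_app(generate=None):
    """Build the app; pass `generate` to swap in a stub, e.g. for testing."""
    app = Flask(__name__)
    state = {"generate": generate}

    @app.route("/generate")
    def generate_lyrics():
        if state["generate"] is None:
            state["generate"] = load_generator()  # load the model once, on first request
        prompt = request.args.get("prompt", "")
        # Non-integer values fall back to the default of 100
        max_length = request.args.get("max_length", default=100, type=int)
        max_length = max(1, min(max_length, MAX_LENGTH_CAP))
        lyrics = state["generate"](prompt, max_length)
        return jsonify(prompt=prompt, lyrics=lyrics)

    return app
```

Passing a stub `generate` function to `create_app` makes the route easy to test without loading GPT-2. Assuming the file is saved as `app.py`, `flask --app app run` should discover the `create_app` factory. Loading the model on the first request rather than at import time keeps startup fast.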

They’re not perfect, right? But they’re passable: kind of cheesy and sentimental. Some attempts are worse than others, of course. If I were to train another model, I would probably choose a more pop-centric dataset and spend a bit more time cleaning the data. Nonetheless, I’m happy with what I’ve done and what I’ve learned, and I hope you enjoyed reading about the process too.

See you guys in the next post!